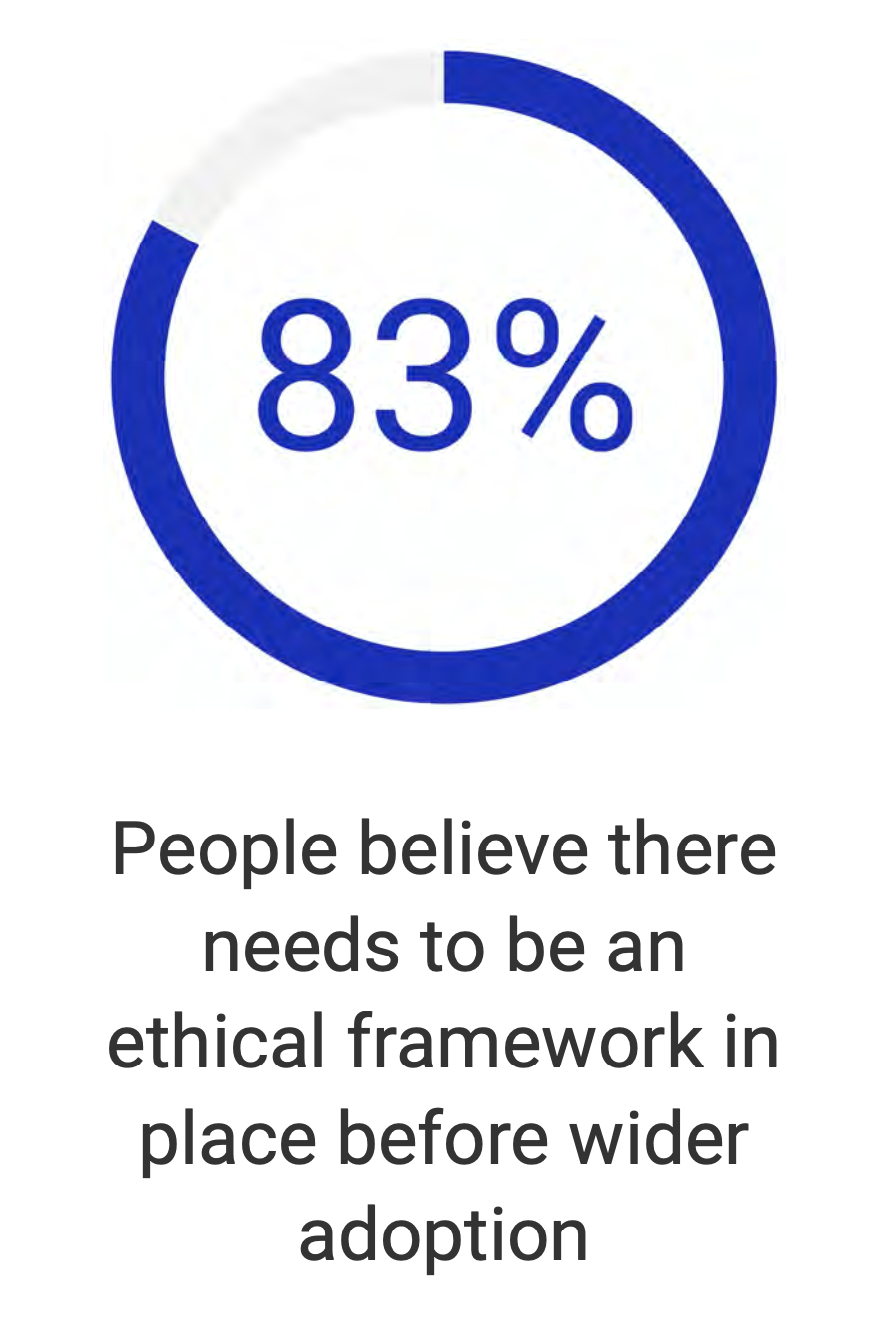

As we move into 2020, artificial intelligence is becoming commonplace throughout society. In many cases, AI is having a positive impact on the world: it is helping to identify breast cancer more accurately and to track elephant populations, and it may even help find a cure for COVID-19. At the same time, AI requires an exceptional amount of data and forces individuals, organizations and governments to confront major ethical questions, as well as the harder problem of how ethical behavior can be measured or enforced.

Citizens must accept (sometimes unknowingly) that Google and Alexa listen to everything. While these companies find value in the aggregate data, there are real downsides for the individual (e.g., the personal privacy implications of having your face added to a database of 100 million faces). On top of that, large companies such as Facebook, Google and Marriott are losing or exposing data, or struggling with ethical data and privacy issues, on a daily basis. Suffice it to say, we are facing massive societal data issues with both short-term and long-term implications.

In our report, “The State of Artificial Intelligence in the Nonprofit Sector,” we noted that nonprofits serve an economic area unfulfilled by government or business. Nonprofits represent underserved or underrepresented individuals and communities. At a time when 81% of U.S. adults believe they have little or no control over their personal data, the use of AI in the nonprofit community is distinct from its use in business or government. In this article, we have teamed up with the experts at the Futurus Group to dive deep into the topic of AI ethics.

Download the full article here!

